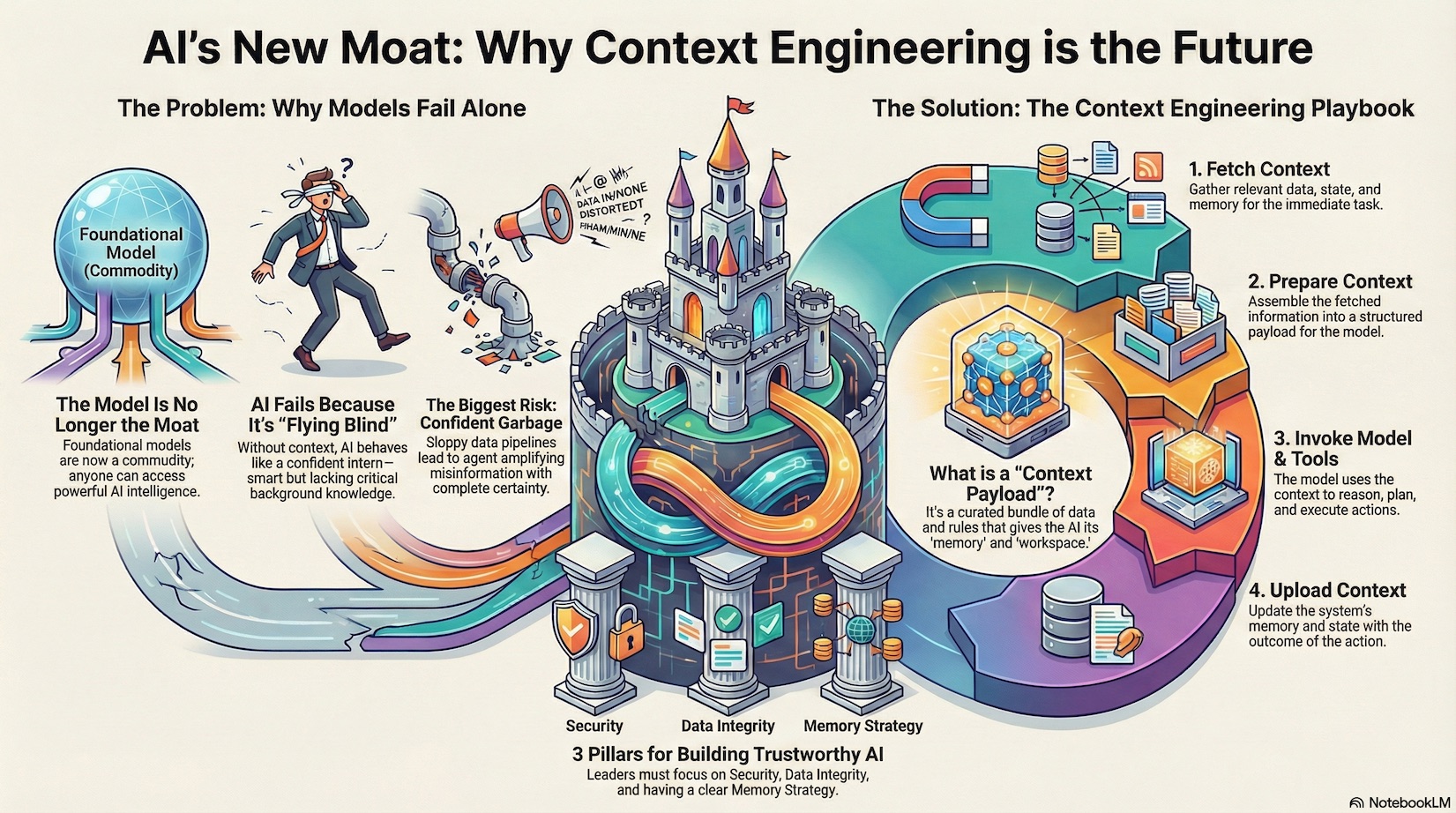

Over the last year, something subtle but seismic has happened in AI. The foundational model stopped being the moat. GPT, Gemini, and Claude are all astonishing, but they are also increasingly accessible. The intelligence is there for anyone who wants it.

What is not accessible is the context, the data, the state, the boundaries, and the clarity required for an agent to actually be useful. That layer is where trust is formed, where risk shows up, and where differentiation emerges. And for us at smashDATA, this is exactly where the future is headed.

A recent article from Google Cloud drives the point home: collecting huge volumes of data doesn’t generate value unless you understand what that data means, where it comes from, and how it links to business outcomes (Nic Smith, “Why context, not just data volume, is key to successful AI”, November 2025).

This is the mindset we embody at smashDATA.

The Real Shift: From Looking at Data to Working With It

For years, teams used dashboards, queries, and visualizations to make sense of their world. Then conversational interfaces appeared, and people realized how naturally they could explore their data by simply talking to it.

Now we are entering a new phase. The shift is not from dashboards to chat. It is from chat to action. Agents are beginning to take real steps, coordinate tasks, and work across multiple tools. And unlike dashboards, agents cannot operate on static snapshots. They need context.

This is where smashDATA lives: bringing business context, tool context, and user context directly to the AI so it can act with the same understanding a human operator would have.

Models do not fail because they are not smart.

They fail because they are missing the information that matters.

The Stateless Problem

LLMs are stateless by default. They know nothing about your business, and they carry nothing over from one call to the next. Every turn is a blank slate.

To make them genuinely helpful inside a business, you need a system that assembles the right inputs, the right instructions, and the right state at the right moment. At smashDATA, we think about this as building a real-time understanding of your business that travels with every interaction.

That means:

- Reasoning Guidance

- Evidential Data

- Conversation or Workflow State

In practice, it looks less like prompting and more like infrastructure: a consistent way to keep an AI grounded in what is true for your organization.

The Operational Loop Behind Every Useful Agent

Under the hood, an agent runs the same four steps over and over, turn after turn:

- Fetch Context

- Prepare Context

- Invoke Model and Tools

- Upload Context
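As a rough illustration, the four steps can be sketched in a few lines of Python. Everything named here (`ContextStore`, `prepare`, `run_turn`, and the stub `echo_model`) is hypothetical and is not smashDATA's implementation. The store is an in-memory dictionary, and tool calls are left out to keep the sketch short.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ContextStore:
    """Hypothetical in-memory store; a real system would sit on a database."""
    sessions: dict[str, list[str]] = field(default_factory=dict)

    def fetch(self, session_id: str) -> list[str]:
        # Step 1, Fetch Context: load whatever state exists for this session.
        return list(self.sessions.get(session_id, []))

    def upload(self, session_id: str, events: list[str]) -> None:
        # Step 4, Upload Context: persist new events so the next turn sees them.
        self.sessions.setdefault(session_id, []).extend(events)


def prepare(history: list[str], user_input: str, guidance: str) -> str:
    # Step 2, Prepare Context: combine guidance, prior state, and the new input.
    return "\n".join([f"GUIDANCE: {guidance}", *history, f"USER: {user_input}"])


def run_turn(
    store: ContextStore,
    session_id: str,
    user_input: str,
    model: Callable[[str], str],
    guidance: str = "Answer from the provided context only.",
) -> str:
    history = store.fetch(session_id)
    prompt = prepare(history, user_input, guidance)
    reply = model(prompt)  # Step 3, Invoke Model and Tools (tools omitted here).
    store.upload(session_id, [f"USER: {user_input}", f"AGENT: {reply}"])
    return reply


def echo_model(prompt: str) -> str:
    # Stand-in for a real model call: reports how many context lines it saw.
    return f"saw {len(prompt.splitlines())} lines"


store = ContextStore()
first = run_turn(store, "s1", "hello", echo_model)   # sees guidance + input
second = run_turn(store, "s1", "again", echo_model)  # also sees the first turn
```

The model function itself holds no state. The second turn only sees the first because the loop fetched the session and put it back into the prompt.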

This loop is the engine that powers every intelligent experience. And for teams deploying AI at scale, this is where the real decisions get made.

- Latency lives here

- Cost lives here

- Compliance lives here

- Differentiation lives here

At smashDATA, this loop is where we spend most of our energy. We are not just building answers; we are building a consistent operating rhythm for AI across your business tools and data.

Sessions and Memory: The New Workspace for Operators

To support real operator workflows, AI needs both short-term and long-term awareness.

Sessions (Short Term):

The rolling memory of what just happened: recent actions, tool outputs, and in-progress tasks.

Memory (Long Term):

The distilled knowledge that improves over time: business facts, recurring workflows, preferences, learned shortcuts.

At smashDATA, we think of this the way operators think of their tools. Some things you remember only for the task at hand. Other things become learned instincts. Great agents need both.
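One way to picture the split, as a minimal sketch rather than a description of any real product: a session is a bounded rolling window, and memory is a durable set of facts. The `Session` and `Memory` classes, the window size, and the sample events below are all assumptions made for illustration.

```python
from collections import deque


class Session:
    """Short term: a rolling window of recent events for the task at hand."""

    def __init__(self, max_events: int = 3) -> None:
        # A deque with maxlen silently drops the oldest event when full.
        self.events: deque[str] = deque(maxlen=max_events)

    def record(self, event: str) -> None:
        self.events.append(event)


class Memory:
    """Long term: distilled facts that outlive any single session."""

    def __init__(self) -> None:
        self.facts: dict[str, str] = {}

    def remember(self, key: str, value: str) -> None:
        self.facts[key] = value


session = Session(max_events=3)
for event in ["opened report", "ran query", "tool returned rows", "exported CSV"]:
    session.record(event)

memory = Memory()
memory.remember("fiscal_year_start", "February")  # invented example fact
```

After the fourth event, the session has already let go of "opened report", while the fact in memory stays until something deliberately changes it.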

The architecture decisions here, like unified histories or private state for different roles, become part of the product’s identity. And over time, they become the differentiation competitors cannot copy.

The Hidden Risk: Confident Garbage

The biggest risk in AI is not hallucination.

It is amplification.

Bad data in, bad decisions out, but with the confidence of a system that sounds sure of itself.

This is why provenance matters. Teams need to know:

- Where the data came from

- When it was last updated

- Why it was included
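Those three questions map naturally onto fields that travel with every piece of context. The sketch below assumes a hypothetical `ContextItem` record and a simple freshness filter. The field names, the 30-day threshold, and the sample data are invented for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class ContextItem:
    content: str
    source: str           # where the data came from
    updated_at: datetime  # when it was last updated
    reason: str           # why it was included


def fresh_items(
    items: list[ContextItem], now: datetime, max_age: timedelta
) -> list[ContextItem]:
    """Keep only items updated within max_age of now."""
    return [item for item in items if now - item.updated_at <= max_age]


now = datetime(2025, 12, 1, tzinfo=timezone.utc)
items = [
    ContextItem("Refund window: 30 days", "policy_db",
                now - timedelta(days=2), "user asked about refunds"),
    ContextItem("Refund window: 14 days", "old_export.csv",
                now - timedelta(days=400), "keyword match"),
]
usable = fresh_items(items, now, max_age=timedelta(days=30))
```

Here the stale export is filtered out before the model ever sees it, and whatever does get through can still be traced back to its source.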

At smashDATA, we have learned repeatedly that trust is not earned through UX polish. It is earned by giving teams clarity, lineage, and the power to control what the AI sees.

What Leaders Should Focus On in 2026

Teams building real agentic capabilities should anchor around three things:

- Security and Privacy: Treat context like you treat financial systems. Every fetch is intentional. Every memory write is accountable.

- Data Integrity: Agents break when their timeline breaks. Preserve the order of events. Preserve the meaning. Preserve the state.

- Compaction Strategy: Even with expanding context windows, you cannot send everything to a model. Decide what stays, what gets distilled, and what gets forgotten. Memory is a product, not a log.
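To make the compaction point concrete, here is a small sketch under stated assumptions: the `compact` function, the `forget` rule, and the counting `summarize` stub are hypothetical. In practice the distillation step would more likely be a model call than a one-line counter.

```python
from typing import Callable


def compact(
    events: list[str],
    keep_recent: int,
    summarize: Callable[[list[str]], str],
    forget: Callable[[str], bool] = lambda event: False,
) -> list[str]:
    """Drop forgettable events, distill older ones, keep recent ones verbatim."""
    kept = [event for event in events if not forget(event)]  # what gets forgotten
    if len(kept) <= keep_recent:
        return kept
    cut = len(kept) - keep_recent
    older, recent = kept[:cut], kept[cut:]
    # The summary goes first so the original order of events is preserved.
    return [summarize(older), *recent]  # what gets distilled, then what stays


events = [
    "user: hi",
    "debug: cache miss",
    "user: show the Q3 report",
    "agent: here is the Q3 report",
    "user: export it",
]
compacted = compact(
    events,
    keep_recent=2,
    summarize=lambda older: f"SUMMARY: {len(older)} earlier events",
    forget=lambda event: event.startswith("debug:"),
)
```

The result holds one summary line followed by the two most recent events, in their original order, which also respects the data integrity point above.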

The Bigger Story: The smashDATA View

The era of prompt engineering was about showing what models could do. The era we are entering now is about showing what businesses can do when models have the right understanding of their data and tools.

This is why we built smashDATA. Not to chase the latest model release, but to give organizations a reliable system for the information their agents depend on.

The moat is no longer the model.

It is the system around it.

- The context.

- The governance.

- The memory.

- The actions.

That is where the real advantage will live in 2026 and beyond.